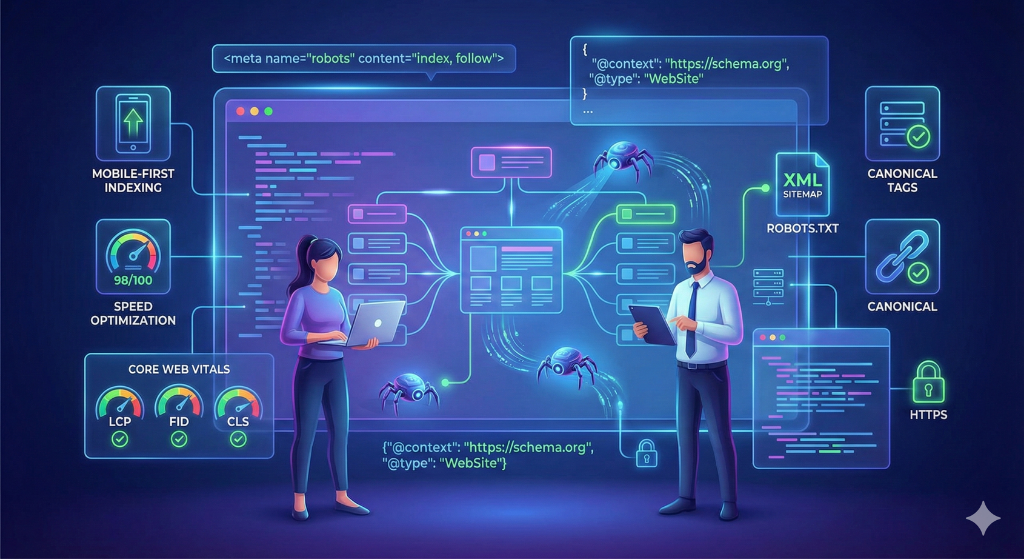

Search engine optimization isn’t just about content and keywords. Technical SEO, the behind-the-scenes infrastructure that determines whether search engines can find, crawl, index, and understand your website, forms the foundation of any successful SEO strategy. Content creators can write brilliant articles, but if the technical implementation is flawed, that content may never appear in search results.

Developers play crucial roles in technical SEO implementation, yet many developers haven’t received formal training in SEO principles. Marketing teams focus on content and keywords while assuming technical foundations are solid. This disconnect creates situations where beautifully designed websites with excellent content perform poorly in search results due to fixable technical issues.

The gap between development and SEO often stems from different priorities. Developers prioritize functionality, user experience, and code quality. SEO professionals focus on visibility, crawlability, and search engine understanding. Both perspectives are essential, and the best results come from combining them.

After working on hundreds of websites where technical SEO issues were blocking otherwise excellent content from ranking, we’ve identified the technical elements developers must understand and implement correctly. This checklist covers the essential technical SEO knowledge every developer needs to build search engine-friendly websites that give content the best possible chance of ranking.

Understanding How Search Engines Work

Before diving into specific technical requirements, it helps to understand how search engines discover, process, and rank content, because that process explains why technical SEO matters.

Crawling is how search engines discover content. Search engine bots (Googlebot for Google, Bingbot for Bing) follow links from known pages to discover new pages. If bots can’t reach pages because of broken links, blocked resources, or complicated navigation, those pages are unlikely to be indexed.

Rendering processes JavaScript and dynamic content. Modern search engines can execute JavaScript, but it requires more resources than processing static HTML. Heavy JavaScript applications or poor implementation can prevent search engines from seeing content even when human visitors see it fine.

Indexing stores discovered content in search engines’ databases. Search engines analyze content, extract meaning, identify topics and keywords, and store information for retrieval when users search. Technical issues can prevent indexing or cause search engines to misunderstand what pages are about.

Ranking determines which pages show for which searches. Hundreds of ranking factors influence positions, many of which developers directly control: site speed, mobile experience, structured data, security, and technical implementation quality.

Understanding this process helps developers see how their technical decisions affect search visibility. Every technical choice (server configuration, JavaScript framework, URL structure, redirect handling) can affect whether content reaches users through search engines.

Site Architecture and URL Structure

How you structure websites and URLs affects both search engines’ ability to understand site organization and users’ ability to navigate effectively.

Logical hierarchy organizes content in clear parent-child relationships. The homepage links to main category pages, which link to subcategory pages, which link to individual content pages. This hierarchical structure helps search engines understand content relationships and relative importance.

Flat site structures, where every page sits one click from the homepage, blur importance signals. Deep structures that require five or six clicks to reach content bury pages too deeply. Aim for three to four levels at most between the homepage and the deepest content.

URL structure should be descriptive, clean, and hierarchical. A good URL looks like example.com/category/subcategory/page-name. It tells users and search engines what a page contains before anyone visits it.

Bad URLs look like: example.com/page?id=12345&cat=7. These query string URLs provide no information about content and create potential duplicate content issues if parameters generate multiple URLs for identical content.

Consistent URL patterns throughout sites help search engines understand structure. If blog posts use /blog/post-title/ format, maintain that format consistently rather than mixing /blog/post-title/, /post-title/, and /2024/01/post-title/.

Lowercase URLs prevent duplicate content issues because URL paths are case-sensitive (hostnames are not): example.com/Page and example.com/page are technically different URLs. Enforce lowercase paths, for example by 301-redirecting mixed-case requests, to avoid confusion and consolidate authority.

Hyphens separate words in URLs rather than underscores. While search engines handle both, hyphens are conventional for web URLs. Use page-name not page_name.

Avoid special characters beyond hyphens in URLs. Spaces, ampersands, and special characters require encoding (%20 for spaces), creating ugly, confusing URLs.

Keep URLs concise while remaining descriptive. Shorter URLs are easier to share and remember, but don’t sacrifice clarity for brevity. example.com/seo-guide is better than example.com/complete-comprehensive-guide-to-search-engine-optimization-for-beginners.
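The URL rules above (lowercase, hyphens instead of underscores or spaces, no special characters, concise) are easiest to enforce when slugs are generated rather than typed by hand. A minimal sketch, assuming slugs are derived from page titles; the `max_words` cutoff is an arbitrary illustrative default, and a real CMS would also need to keep slugs unique:

```python
import re
import unicodedata


def slugify(title: str, max_words: int = 6) -> str:
    """Turn a page title into a short, lowercase, hyphen-separated URL slug."""
    # Decompose accented characters (é -> e + accent) and drop the non-ASCII marks.
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    text = text.lower()
    # Replace every run of characters other than a-z and 0-9 with a single hyphen,
    # which also turns underscores, spaces, and ampersands into hyphens.
    text = re.sub(r"[^a-z0-9]+", "-", text).strip("-")
    # Keep the slug concise by limiting the number of words (hypothetical default).
    words = [word for word in text.split("-") if word]
    return "-".join(words[:max_words])


print(slugify("Complete Guide: SEO & Performance!"))
```

Truncating by word count is a blunt instrument; editors may still want to override generated slugs when the first few words don’t describe the page well.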

Canonical URL structure enforces single versions. Websites accessible via www.example.com, example.com, http://example.com, and https://example.com have four URLs for identical content. Choose one canonical version and redirect others.
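Choosing one canonical scheme and host boils down to a single decision per request: is this already the canonical URL, and if not, where should it 301-redirect? The sketch below makes that decision explicit. The choice of `https` plus the `www` host, and the host names themselves, are hypothetical; in production this logic usually lives in web server or CDN configuration.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

# Hypothetical choices: HTTPS plus the www host as the single canonical version.
CANONICAL_SCHEME = "https"
CANONICAL_HOST = "www.example.com"
SITE_HOSTS = {"example.com", "www.example.com"}


def canonical_redirect(url: str) -> Optional[str]:
    """Return the URL to 301-redirect to, or None if the request is already canonical."""
    parts = urlsplit(url)
    if parts.hostname not in SITE_HOSTS:
        return None  # not one of our hosts; leave the request alone
    # Keep the path and query string; only the scheme and host are normalized.
    target = urlunsplit(
        (CANONICAL_SCHEME, CANONICAL_HOST, parts.path or "/", parts.query, "")
    )
    return None if target == url else target


print(canonical_redirect("http://example.com/page?x=1"))
```

Redirecting all three non-canonical variants straight to the final URL in one hop also avoids the redirect chains discussed later.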

HTTPS and Security

Security affects both user trust and search engine rankings. HTTPS is no longer optional: it’s a ranking factor and a user expectation.

TLS (the successor to SSL) encrypts data transmitted between browsers and servers, protecting sensitive information and preventing man-in-the-middle attacks, while the site’s certificate lets browsers verify they are talking to the genuine server. Implementing HTTPS requires obtaining a certificate and configuring servers to use it.

Free certificates from Let’s Encrypt or hosting providers eliminate cost barriers. There’s no excuse for not implementing HTTPS on modern websites.

HTTP to HTTPS redirects ensure users and search engines access secure versions. Configure 301 permanent redirects from http:// to https:// versions for all pages.

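Mixed content occurs when HTTPS pages load resources (images, scripts, stylesheets) over HTTP. Browsers block or flag these requests, which can break pages and erodes user trust. Ensure all resources load over HTTPS; explicit https:// URLs are cleaner than protocol-relative URLs (//cdn.example.com/...), which are unnecessary once a site is fully on HTTPS.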
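Mixed content is easy to catch before deployment by scanning rendered HTML for subresources that still use http://. A minimal sketch using Python’s standard-library `HTMLParser`; it only checks a handful of common tags (and only stylesheet `<link>` elements), and ignores `srcset`, inline CSS, and resources requested by JavaScript:

```python
from html.parser import HTMLParser

# (tag, attribute) pairs that make the browser fetch a subresource.
RESOURCE_ATTRS = {
    ("img", "src"), ("script", "src"), ("link", "href"), ("iframe", "src"),
    ("source", "src"), ("video", "src"), ("audio", "src"),
}


class MixedContentFinder(HTMLParser):
    """Collect subresource URLs that would load over plain HTTP on an HTTPS page."""

    def __init__(self) -> None:
        super().__init__()
        self.insecure: list[str] = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        # Among <link> tags, only stylesheets are fetched as subresources here;
        # rel="canonical" and similar links are skipped.
        if tag == "link" and "stylesheet" not in (attr_map.get("rel") or "").lower():
            return
        for name, value in attrs:
            if (tag, name) in RESOURCE_ATTRS and value and value.lower().startswith("http://"):
                self.insecure.append(value)


def find_mixed_content(html: str) -> list[str]:
    finder = MixedContentFinder()
    finder.feed(html)
    finder.close()
    return finder.insecure


page = '<link rel="stylesheet" href="http://cdn.example.com/a.css"><img src="https://example.com/ok.png">'
print(find_mixed_content(page))
```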

HSTS (HTTP Strict Transport Security) headers instruct browsers to only access sites via HTTPS, preventing protocol downgrade attacks. Implement HSTS after confirming HTTPS works correctly.

Secure cookies need the Secure flag so browsers only send them over HTTPS connections. Cookies without it can be sent over plain HTTP, where they can be intercepted.
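HSTS and secure cookies are both just response headers. The sketch below builds them with Python’s standard-library `SimpleCookie`. The cookie name `session`, the one-year `max-age`, and `includeSubDomains` are assumptions: `includeSubDomains` is only safe if every subdomain serves HTTPS, and many teams roll out HSTS with a short `max-age` first.

```python
from http.cookies import SimpleCookie

# Assumption: one year, a common value once HTTPS is confirmed working everywhere.
HSTS_MAX_AGE = 31536000


def security_headers(session_id: str) -> list[tuple[str, str]]:
    """Response headers for an HTTPS response: HSTS plus a Secure session cookie."""
    cookie = SimpleCookie()
    cookie["session"] = session_id        # hypothetical cookie name
    cookie["session"]["secure"] = True    # browser only sends it over HTTPS
    cookie["session"]["httponly"] = True  # not readable from page JavaScript
    cookie["session"]["path"] = "/"
    return [
        ("Strict-Transport-Security", f"max-age={HSTS_MAX_AGE}; includeSubDomains"),
        ("Set-Cookie", cookie["session"].OutputString()),
    ]


for name, value in security_headers("abc123"):
    print(f"{name}: {value}")
```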

Search Console verification for the HTTPS version ensures you’re monitoring the correct property. During migration, add both HTTP and HTTPS URL-prefix properties to Google Search Console (or a single Domain property, which covers all protocols and subdomains), then focus on HTTPS performance post-migration.

HTTPS implementation is a fundamental security and SEO requirement. Implement it properly before launch, or migrate existing sites systematically to avoid ranking drops during the transition.

Mobile Optimization and Responsive Design

Mobile-first indexing means Google predominantly uses the mobile version of content for indexing and ranking. Mobile optimization isn’t optional; it’s the primary consideration.

Responsive design adapts layouts to various screen sizes using CSS media queries. Content, HTML, and URLs remain identical across devices while presentation adjusts. This approach is Google’s recommended configuration because it’s simplest to maintain and presents identical content to users and search engines.

The viewport meta tag must be configured correctly: <meta name="viewport" content="width=device-width, initial-scale=1">. This tag tells browsers to set the layout width to the device’s screen width and render at 100% scale. Missing or incorrect viewport configuration prevents proper mobile rendering.

Touch targets should be adequately sized for finger interaction. Buttons, links, and interactive elements need minimum 48×48 pixel touch targets with adequate spacing. Tiny click targets frustrate mobile users and signal poor mobile experience to search engines.

Text readability without zooming requires appropriate font sizes. Minimum 16px font size for body text ensures readability on mobile devices. Text requiring zooming indicates mobile-unfriendly design.

Mobile page speed matters even more than desktop speed because mobile connections are often slower and mobile users are often on the go with less patience. Optimize images aggressively, minimize JavaScript, and eliminate render-blocking resources.

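Google Search Console’s Mobile Usability report, which flagged mobile-specific issues Google detected while crawling, was retired in December 2023 along with the standalone Mobile-Friendly Test. Use Lighthouse or PageSpeed Insights to find mobile issues, and fix them promptly to maintain mobile performance.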

Separate mobile URLs (m.example.com) are no longer recommended. While technically possible with proper annotation, responsive design is simpler, less error-prone, and preferred by Google. If you currently use separate mobile URLs, consider migrating to responsive design.

Dynamic serving returns different HTML to mobile and desktop users from the same URLs based on user-agent detection. This approach is technically valid but more complex than responsive design: it requires a Vary: User-Agent response header so caches and crawlers know the HTML differs by device, plus careful implementation to avoid serving the wrong version.

Mobile optimization directly impacts rankings under mobile-first indexing. Developers must prioritize mobile experience in design and implementation decisions.

Page Speed and Core Web Vitals

Page speed affects user experience, conversion rates, and search rankings. Core Web Vitals, the specific metrics Google uses as ranking signals, provide standardized performance measurements.

Largest Contentful Paint (LCP) measures loading performance. LCP should occur within 2.5 seconds of the page starting to load. This metric captures when the largest visible element in the viewport renders, approximating when users perceive the page has loaded.

Improve LCP by optimizing images (compression, appropriate formats, and lazy loading for offscreen images only; lazy-loading the LCP image itself delays it), removing render-blocking resources, optimizing server response times, and implementing efficient caching.

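Interaction to Next Paint (INP) measures interactivity. INP replaced First Input Delay (FID) as the responsiveness Core Web Vital in March 2024, and it should be 200 milliseconds or less. Where FID measured only the delay before the browser began handling the first interaction, INP captures the time from user interactions (clicking a button, tapping a link, pressing a key) to the next visual update across the whole visit.

Improve INP by minimizing JavaScript execution time, breaking up long tasks, and removing or deferring non-critical JavaScript.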

Cumulative Layout Shift (CLS) measures visual stability. CLS should be 0.1 or less. This metric captures unexpected layout shifts where content moves after the initial render, for example when images load without specified dimensions and push content down.

Improve CLS by specifying dimensions for images and embeds, avoiding inserting content above existing content, and using transform animations rather than properties that trigger layout changes.
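Google assesses Core Web Vitals on field data at the 75th percentile of page loads, using published "good" and "poor" boundaries for LCP, INP (which replaced FID in March 2024), and CLS. The sketch below rates a set of samples the same way. The nearest-rank percentile is a simplification of what real field-data tooling computes, and the sample values are made up.

```python
import math

# (good upper bound, needs-improvement upper bound); anything above is "poor".
THRESHOLDS = {
    "LCP": (2500, 4000),  # milliseconds
    "INP": (200, 500),    # milliseconds
    "CLS": (0.1, 0.25),   # unitless layout-shift score
}


def p75(samples: list[float]) -> float:
    """75th percentile by the nearest-rank method (a simplification)."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))  # 1-based rank
    return ordered[rank - 1]


def rate(metric: str, samples: list[float]) -> str:
    """Classify a metric's 75th-percentile value against the thresholds."""
    good, poor = THRESHOLDS[metric]
    value = p75(samples)
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


print(rate("INP", [120, 180, 350, 600]))
```

Rating the 75th percentile rather than the average means a page passes only if most visits, not just typical ones, are fast.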

Server response time affects all performance metrics. Time to First Byte (TTFB) should be under 600 milliseconds. Slow servers delay everything. Optimize server performance through quality hosting, efficient code, database optimization, and caching.

Minification removes unnecessary characters from CSS and JavaScript files without changing functionality, reducing file sizes by 20-40% or more.

Compression with GZIP or Brotli reduces transmitted file sizes. Enable compression for HTML, CSS, JavaScript, and other text-based resources.
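Web servers and CDNs normally handle compression in configuration, but the negotiation is simple enough to sketch. A minimal example using Python’s standard-library `gzip` module (Brotli needs a third-party package, so it is left out). The `Accept-Encoding` parsing is deliberately simplified and ignores quality values such as `gzip;q=0`.

```python
import gzip


def encode_response(body: bytes, accept_encoding: str) -> tuple[bytes, dict[str, str]]:
    """Gzip a text response when the client advertises support (simplified negotiation)."""
    headers = {"Vary": "Accept-Encoding"}  # caches must key responses on this header
    # Simplified: take each listed coding name and ignore any ";q=..." parameters.
    offered = {token.split(";")[0].strip().lower() for token in accept_encoding.split(",")}
    if "gzip" in offered:
        headers["Content-Encoding"] = "gzip"
        return gzip.compress(body), headers
    return body, headers


html = b"<html>" + b"<p>repetitive markup</p>" * 200 + b"</html>"
compressed, response_headers = encode_response(html, "gzip, deflate, br")
print(len(html), len(compressed), response_headers)
```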

Image optimization through compression, appropriate formats (WebP over JPEG/PNG), and responsive images serving appropriately sized versions to different devices dramatically reduces page weight.

Lazy loading defers loading offscreen images until users scroll toward them, reducing initial page weight and improving load times.
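Responsive images, explicit dimensions (which prevent layout shift), and native lazy loading all come together in a single `<img>` tag. The sketch below assumes a hypothetical image service that serves resized WebP files at `{base}-{width}w.webp`; the `sizes` value is a placeholder that depends on your layout. Pass `lazy=False` for the hero or LCP image so it isn’t deferred.

```python
from html import escape


def responsive_img(base: str, alt: str, width: int, height: int,
                   widths: tuple[int, ...] = (480, 960, 1440), lazy: bool = True) -> str:
    """Build an <img> tag with srcset, explicit dimensions, and optional lazy loading."""
    # Hypothetical URL pattern: /img/hero-960w.webp and so on.
    srcset = ", ".join(f"{base}-{w}w.webp {w}w" for w in widths)
    attrs = [
        f'src="{escape(base)}-{widths[-1]}w.webp"',  # fallback for browsers ignoring srcset
        f'srcset="{escape(srcset)}"',
        'sizes="(max-width: 600px) 100vw, 50vw"',     # assumption: layout-specific
        f'width="{width}" height="{height}"',         # reserves space, avoiding layout shift
        f'alt="{escape(alt)}"',
    ]
    if lazy:
        attrs.append('loading="lazy"')  # native lazy loading; disable for the LCP image
    return "<img " + " ".join(attrs) + ">"


print(responsive_img("/img/hero", "Team at work", 1440, 810, lazy=False))
```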

Critical CSS inlines styles needed for above-the-fold content directly in HTML, letting below-the-fold CSS load asynchronously. This technique improves perceived performance by rendering visible content faster.

Content Delivery Networks (CDNs) serve static assets from geographically distributed servers, reducing latency for global audiences.

Performance optimization is continuous work, not one-time implementation. Regular testing and monitoring maintain performance as sites evolve. Our web development services prioritize performance from initial implementation through ongoing maintenance.

XML Sitemaps and Robots.txt

Sitemaps and robots.txt files guide search engine crawling, helping bots discover content efficiently and respect crawl restrictions.

XML sitemaps list URLs you want search engines to crawl and index. Sitemaps should include all important pages, exclude unimportant pages (login pages, admin areas, duplicate content), and organize by type if sites are large (separate sitemaps for blog posts, products, pages).

Sitemap structure uses XML format, listing each URL with optional metadata such as its last modification date (lastmod). The protocol also defines change frequency and priority fields, but Google ignores both and uses lastmod only when it is consistently accurate, so an accurate lastmod does far more for crawl efficiency.
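Dynamic sitemap generation can be as small as the sketch below, which uses Python’s standard-library ElementTree. It assumes pages arrive as a hypothetical list of (absolute URL, last-modified date) pairs from your CMS, and it emits only `loc` and `lastmod`. ElementTree escapes characters such as `&` in URLs, which the sitemap protocol requires.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, date]]) -> str:
    """Render a <urlset> sitemap from (absolute URL, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        # W3C date format (YYYY-MM-DD); only meaningful if it tracks real content changes.
        ET.SubElement(url, "lastmod").text = lastmod.isoformat()
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


print(build_sitemap([("https://example.com/blog/seo-guide/", date(2024, 5, 1))]))
```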

Sitemap submission to Google Search Console and Bing Webmaster Tools notifies search engines about sitemaps. Include sitemap location in robots.txt: Sitemap: https://example.com/sitemap.xml.

Sitemap updates should happen automatically when content changes. Dynamic sitemap generation ensures search engines always have current information about site content.

Large site sitemaps should be split into multiple files if they exceed 50,000 URLs or 50MB uncompressed. Use sitemap index files linking to individual sitemaps.
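Splitting a large URL list and pointing a sitemap index at the pieces might look like the sketch below. The `sitemap-N.xml` file names are hypothetical, and the sketch only enforces the 50,000-URL limit; very long URLs could still push a file past 50MB, which a real generator should also check.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # protocol limit per sitemap file


def chunk(urls: list[str], size: int = MAX_URLS_PER_SITEMAP) -> list[list[str]]:
    """Split URLs into consecutive groups of at most `size`."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_sitemap_index(base_url: str, count: int) -> str:
    """Sitemap index listing sitemap-1.xml ... sitemap-N.xml (hypothetical names)."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for n in range(1, count + 1):
        entry = ET.SubElement(index, "sitemap")
        ET.SubElement(entry, "loc").text = f"{base_url}/sitemap-{n}.xml"
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(index, encoding="unicode")


all_urls = [f"https://example.com/p/{i}" for i in range(120_001)]
parts = chunk(all_urls)
print([len(part) for part in parts])
print(build_sitemap_index("https://example.com", len(parts)))
```

The index file itself is what you reference in robots.txt and submit to Search Console.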

Robots.txt controls search engine crawling by allowing or disallowing paths. Place robots.txt at site root: https://example.com/robots.txt.

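Disallow directives prevent crawling of specific paths: Disallow: /admin/ blocks the admin directory. Disallow controls crawling, not indexing: a blocked URL can still appear in search results if other pages link to it, and robots.txt itself is publicly readable. Use noindex on a crawlable page to keep it out of the index, and protect truly private content with authentication.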

Allow directives override disallow rules for subdirectories: disallow /directory/ but allow /directory/public/. Google resolves conflicting rules by applying the most specific (longest) matching path.

Crawl-delay is respected by some bots (not Googlebot) to limit crawl rate. Use it sparingly, only when server load from crawling causes problems.

Common robots.txt mistakes include blocking CSS or JavaScript files. Search engines need these resources to render pages properly. Never block CSS or JS in robots.txt.

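Test robots.txt rules before deploying them to production to verify they work as intended. Google Search Console’s standalone robots.txt Tester was retired in late 2023; its robots.txt report now shows which robots.txt files Google found and any parsing errors.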
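Rules can also be checked locally, for example in a CI job, with Python’s standard-library `urllib.robotparser`. The robots.txt content below is hypothetical. One caveat: `robotparser` applies the first matching rule in file order, while Google applies the most specific one, so the sketch lists the narrower `Allow` before the broader `Disallow` to make both interpretations agree. `robotparser` also doesn’t implement Google’s `*` and `$` path wildcards.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: Allow precedes the broader Disallow (see caveat above).
ROBOTS_TXT = """\
User-agent: *
Allow: /directory/public/
Disallow: /directory/
Disallow: /admin/

Sitemap: https://example.com/sitemap.xml
"""


def build_parser(text: str) -> RobotFileParser:
    """Parse robots.txt rules from a string instead of fetching them over HTTP."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


rules = build_parser(ROBOTS_TXT)
# CSS and JS paths should come out "allowed" so search engines can render pages.
for path in ["/admin/settings", "/directory/private", "/directory/public/page", "/assets/app.css"]:
    allowed = rules.can_fetch("Googlebot", "https://example.com" + path)
    print(f"{path}: {'allowed' if allowed else 'blocked'}")
```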

Structured Data and Schema Markup

Structured data helps search engines understand content meaning, enabling rich results that stand out in search listings.

Schema.org vocabulary provides standardized formats for marking up content types: articles, products, events, recipes, local businesses, and hundreds more.

JSON-LD format is Google’s recommended structured data implementation. JSON-LD embeds structured data in <script type="application/ld+json"> tags, keeping markup separate from visible content.

Microdata is an alternative format embedding structured data directly in HTML using itemscope and itemprop attributes. While valid, JSON-LD is simpler and recommended.

Common schema types for business websites include Organization (company information), LocalBusiness (for businesses with physical locations), Article (blog posts and articles), Product (for e-commerce), and BreadcrumbList (navigation breadcrumbs).
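As an example of the JSON-LD approach, a BreadcrumbList can be generated from the same hierarchy that drives site navigation. A minimal sketch, assuming the trail is available as (name, absolute URL) pairs from the homepage down; the page names and URLs are hypothetical. Replacing `</` guards against a value such as `</script>` closing the tag early.

```python
import json


def breadcrumb_jsonld(trail: list[tuple[str, str]]) -> str:
    """Render a schema.org BreadcrumbList as a JSON-LD <script> tag.

    trail: (name, absolute URL) pairs from the homepage down to the current page.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": i, "name": name, "item": url}
            for i, (name, url) in enumerate(trail, start=1)
        ],
    }
    # "<\/" is a valid JSON escape for "</", so the data is unchanged when parsed.
    payload = json.dumps(data, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">{payload}</script>'


print(breadcrumb_jsonld([
    ("Home", "https://example.com/"),
    ("Blog", "https://example.com/blog/"),
    ("SEO Guide", "https://example.com/blog/seo-guide/"),
]))
```

The breadcrumb names should match the breadcrumbs visible on the page, in line with the guideline against marking up content users can’t see.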

Testing structured data with Google’s Rich Results Test validates markup and previews how rich results might appear. Fix errors and warnings before deploying.

Structured data monitoring in Google Search Console tracks errors and enhancements, showing which structured data Google detects and any issues requiring attention.

Common mistakes include marking up content not visible on pages (misleading users and violating guidelines), using irrelevant schema types, or providing inaccurate information in structured data that contradicts visible content.

Structured data doesn’t directly improve rankings, but it makes pages eligible for rich results (product listings with pricing, event listings, recipe cards, and similar enhanced listings) that can substantially improve click-through rates.

Canonical Tags and Duplicate Content

Duplicate content confuses search engines about which version to index and rank. Canonical tags clarify which version is primary.

Canonical URL is the preferred version of a page when multiple URLs contain identical or very similar content. Specify canonical URLs with <link rel="canonical" href="https://example.com/preferred-url/"> tags in page heads.

Self-referencing canonicals point to themselves even for unique pages. This practice prevents parameter-based duplicate content and clearly signals preferred URLs.

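Cross-domain canonicals indicate content republished from other sites. If you syndicate content to partner sites, their copies can carry a canonical tag pointing to your original version to preserve your authority. Google treats cross-domain canonicals only as hints, though, and its current syndication guidance prefers that partners add noindex to their copies.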

Canonical mistakes include pointing canonicals to wrong pages, creating canonical chains (A canonicals to B, B canonicals to C), or canonicalizing to noindex pages.

Pagination handling for multi-page content traditionally used rel="next" and rel="prev" attributes, but Google no longer uses them as indexing signals. Instead, give each page in the series a self-referencing canonical, or point canonicals to a view-all version if one exists.

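Google Search Console’s URL Parameters tool, which once let you tell Google how to treat URL parameters, was retired in 2022; Google now decides how to handle parameters on its own. For tracking parameters (utm_source, etc.) that don’t change content, link internally to the clean URL and point canonical tags at the parameter-free version so signals consolidate there.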
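Self-referencing canonicals that ignore tracking parameters can be generated from the requested URL. Which parameters count as "tracking" is site-specific: the `utm_` prefix plus `gclid` and `fbclid` below is an assumption, as is sorting the remaining parameters, which is only safe if parameter order never changes content.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: these parameters never change page content on this site.
TRACKING_PARAMS = {"gclid", "fbclid"}


def canonical_url(url: str) -> str:
    """Normalize to https, lowercase host, no tracking params, no fragment."""
    parts = urlsplit(url)
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if not key.startswith("utm_") and key not in TRACKING_PARAMS
    ]
    query.sort()  # assumption: parameter order never changes content here
    host = parts.hostname or ""  # .hostname is already lowercase and drops any port
    return urlunsplit(("https", host, parts.path or "/", urlencode(query), ""))


def canonical_link(url: str) -> str:
    """Self-referencing canonical tag for the page served at `url`."""
    return f'<link rel="canonical" href="{escape(canonical_url(url))}">'


print(canonical_link("http://Example.com/shoes?utm_source=news&color=red&gclid=abc#reviews"))
```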

Faceted navigation on e-commerce sites creates massive duplicate content potential. Products sorted by different facets (price, color, size) generate unique URLs for similar content. Strategic noindex or canonical use prevents these combinations from creating duplicate content issues.

Redirects and Status Codes

Proper redirect and HTTP status code implementation maintains authority when URLs change and communicates page status clearly to search engines.

301 redirects permanently move pages. Use 301 when content permanently relocates to new URLs. Search engines transfer most ranking signals from old URLs to new ones.

302 redirects temporarily move pages. Use 302 only for genuinely temporary situations like A/B testing or seasonal redirects. Search engines typically keep the original URL indexed for a temporary redirect, although a 302 left in place long enough may eventually be treated as permanent.

404 errors indicate pages don’t exist. Some 404s are normal and fine (deleted content, mistyped URLs). Excessive 404s indicate problems requiring attention: broken links, missing pages, or site architecture issues.

410 status codes indicate that a page has been permanently deleted. A 410 tells search engines the page is gone intentionally and won’t return. Use 410 instead of 404 for deliberately removed content.

Redirect chains occur when URL A redirects to B, which redirects to C. Search engines may not follow entire chains, losing authority along the way. Redirect directly from A to C whenever possible.

Redirect loops happen when pages redirect to each other infinitely. These break sites completely. Test redirects carefully to prevent loops.
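Both problems can be caught in a redirect map before it ships: resolve each source to its final destination so every rule redirects in one hop, and fail loudly on loops. A minimal sketch, assuming redirects are kept as a simple source-to-target mapping of paths (a hypothetical format; real rules may include patterns):

```python
def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Map every source URL straight to its final destination, rejecting loops."""
    flat = {}
    for source, target in redirects.items():
        seen = {source}
        # Keep following while the current target is itself redirected.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flat[source] = target
    return flat


print(flatten_redirects({"/old": "/interim", "/interim": "/new"}))
```

Running this in the build and deploying the flattened map turns A → B → C chains into direct A → C redirects.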

Soft 404s occur when servers return 200 (success) status for nonexistent pages while displaying “not found” messages. Search engines waste resources crawling soft 404s. Ensure missing pages return proper 404 status codes.

Regular redirect audits identify opportunities to consolidate redirect chains and fix redirect issues. Tools like Screaming Frog SEO Spider find redirect problems efficiently.

International SEO and Hreflang

Websites serving multiple languages or countries need special technical implementation to prevent duplicate content issues and target content correctly.

Hreflang tags tell search engines which language and country versions to show different users. Implement hreflang in page heads, HTTP headers, or XML sitemaps.

Hreflang syntax: <link rel="alternate" hreflang="en-us" href="https://example.com/us/"> specifies the US English version. <link rel="alternate" hreflang="en-gb" href="https://example.com/uk/"> specifies the UK English version.

Bi-directional hreflang requires pages to specify all language versions including themselves. Every page’s hreflang tags should list all available language versions.

X-default hreflang specifies the default version for users whose language preferences don’t match any specified alternative: <link rel="alternate" hreflang="x-default" href="https://example.com/">.

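Country-specific vs. language-specific hreflang values must match your implementation. Use ISO 639-1 language codes (en, de, fr) for language-only versions, optionally followed by an ISO 3166-1 Alpha 2 region code (en-us, en-gb) for country-specific versions. A region code can’t stand alone (hreflang="us" is invalid), and the UK’s code is gb, not uk. A generic version such as en can sit alongside en-us and en-gb as a fallback for English speakers in other regions.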

Common hreflang mistakes include forgetting return tags (Page A references Page B but Page B doesn’t reference Page A), incorrect language codes, or hreflang conflicts with canonical tags.
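Missing return tags are easiest to prevent by generating the whole cluster’s tags from one mapping and emitting the identical block on every page in it, so each page automatically references itself and all its siblings. A minimal sketch; the URLs are hypothetical, and the function does not validate the language and region codes themselves.

```python
from html import escape


def hreflang_links(alternates: dict[str, str], x_default: str) -> str:
    """Emit the full hreflang block shared by every page in a language cluster.

    alternates maps hreflang values (e.g. "en-us") to absolute URLs.
    """
    lines = [
        f'<link rel="alternate" hreflang="{code}" href="{escape(url)}">'
        for code, url in sorted(alternates.items())
    ]
    lines.append(f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}">')
    return "\n".join(lines)


cluster = {"en-us": "https://example.com/us/", "en-gb": "https://example.com/uk/"}
# The same output goes into the <head> of both /us/ and /uk/.
print(hreflang_links(cluster, "https://example.com/"))
```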

URL structure for international sites typically uses subdirectories (example.com/en/, example.com/de/), subdomains (en.example.com, de.example.com), or country-code top-level domains (example.co.uk, example.de). Each approach has trade-offs for authority, maintenance, and technical complexity.

JavaScript SEO Considerations

Modern web development increasingly relies on JavaScript frameworks, creating challenges for search engine crawling and indexing.

Server-side rendering (SSR) generates HTML on servers before sending to browsers and search engines. SSR ensures search engines receive fully rendered HTML, eliminating rendering challenges.

Client-side rendering sends minimal HTML to browsers with JavaScript building content dynamically. While modern search engines execute JavaScript, this approach creates rendering delays and potential issues.

Static site generation (SSG) pre-renders pages at build time, creating static HTML files. This approach provides SSR benefits for SEO while maintaining performance advantages of static serving.

Hybrid approaches combine techniques, using SSG for mostly static content and SSR for dynamic content. Next.js and similar frameworks support hybrid rendering.

JavaScript framework choice affects SEO complexity. React, Angular, and Vue can all work for SEO with proper implementation, but SSR or SSG is generally recommended for content-focused sites.

Progressive enhancement builds basic functionality in HTML with JavaScript adding enhancements. This approach ensures core content is accessible even if JavaScript fails or hasn’t executed yet.

Content visibility testing confirms search engines see your content: inspect pages with Google Search Console’s URL Inspection tool. If content doesn’t appear in the rendered HTML view, Google can’t index it.

Loading states should avoid showing “Loading…” messages to search engines. Implement loading states that don’t block initial content rendering.

JavaScript errors can prevent rendering and content access. Monitor JavaScript errors and fix them promptly to ensure functionality works for search engines.

Working with experienced web developers who understand both modern JavaScript frameworks and SEO requirements ensures technical implementation supports search visibility.

Log File Analysis and Crawl Budget

Understanding how search engines actually crawl your site helps optimize for efficient crawling and resource allocation.

Server logs record every request to your server, including search engine bot visits. Analyzing logs reveals crawling patterns, issues, and opportunities.

Crawl budget is the number of pages search engines crawl within given timeframes. Large sites might have pages that rarely get crawled, while smaller sites have sufficient crawl budget for all pages.

Crawl efficiency measures how many crawled pages actually matter. If bots waste crawl budget on unimportant pages (faceted navigation, infinite scroll, duplicate content), important pages get crawled less frequently.

Crawl frequency optimization identifies pages that should be crawled more often (frequently updated content, key landing pages) and pages crawled too often (rarely changing pages, unimportant pages).

Googlebot smartphone vs. desktop analysis shows whether Google predominantly crawls mobile or desktop versions. Under mobile-first indexing, smartphone crawling should dominate.

Crawl errors in logs reveal which URLs return errors to bots even if they appear fine to users, helping identify server issues or blocking problems.
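Before reaching for a dedicated analyzer, a short script can answer the basic questions above: how often Googlebot visits, smartphone versus desktop, and which status codes it receives. The sketch assumes logs in the Apache/Nginx "combined" format. It trusts the user-agent string, which anyone can spoof; for real analysis, verify Googlebot by reverse DNS lookup (googlebot.com or google.com hosts) or against Google’s published IP ranges.

```python
import re
from collections import Counter

# Apache/Nginx "combined" log format (assumption: your servers use it).
LINE_RE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_summary(lines):
    """Count Googlebot hits by crawler type and by HTTP status code."""
    by_type, by_status = Counter(), Counter()
    for line in lines:
        match = LINE_RE.match(line)
        if not match or "Googlebot" not in match["agent"]:
            continue  # unparseable line, or not claiming to be Googlebot
        # Googlebot Smartphone identifies itself with "Mobile" in the user agent.
        kind = "smartphone" if "Mobile" in match["agent"] else "desktop"
        by_type[kind] += 1
        by_status[match["status"]] += 1
    return by_type, by_status


with_file = False  # set to True to read a real (hypothetical) access.log
if with_file:
    with open("access.log", encoding="utf-8") as log:
        print(googlebot_summary(log))
```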

Log file analyzers like Screaming Frog Log File Analyzer, Botify, or OnCrawl automate analysis, providing insights about crawling behavior and issues.

Developer-SEO Collaboration Best Practices

Technical SEO succeeds through collaboration between developers and SEO professionals rather than siloed work.

Involve developers early in SEO planning rather than asking them to fix technical issues after content launches. Upfront involvement prevents building SEO barriers into sites.

Provide context for technical requirements. Developers implement solutions better when understanding why requirements matter, not just what needs implementing.

Document technical requirements clearly in specifications, wireframes, and tickets. Vague “make it SEO-friendly” requests lead to incomplete implementations.

Test before launch in staging environments, allowing developers to fix issues before they affect live sites and rankings.

Monitor after launch for technical issues introduced by updates or changes, addressing problems quickly before they significantly impact rankings.

Share data and insights between teams. Developers provide technical knowledge about how implementations work; SEO professionals provide data about what’s working and what needs improvement.

Establish processes for technical changes affecting SEO. Require SEO review for URL structure changes, site architecture modifications, or major technical implementations.

Effective collaboration produces technically sound, SEO-friendly websites that give content the best chance of ranking and attracting organic traffic.

Common Technical SEO Mistakes Developers Make

Understanding common mistakes helps developers avoid pitfalls that hurt search visibility.

Blocking CSS or JavaScript in robots.txt prevents search engines from properly rendering pages. Never block resources search engines need for rendering.

Missing or incorrect canonical tags create duplicate content issues. Every page needs a canonical tag, either self-referencing or pointing to the preferred version.

Slow page speed from unoptimized images, render-blocking resources, or inefficient code hurts rankings and user experience. Prioritize performance.

Breaking mobile experience through poor responsive implementation or assuming desktop experience suffices creates problems under mobile-first indexing.

Incorrect status codes like returning 200 for nonexistent pages (soft 404s) waste crawl budget and confuse search engines.

JavaScript-dependent navigation where navigation only works with JavaScript enabled prevents search engines from discovering content through menus.
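Google reliably follows only `<a>` elements with a real URL in `href`; links driven by `onclick` handlers or `javascript:` URLs aren’t crawlable. A quick audit of server-delivered HTML can flag these. This sketch inspects only `<a>` tags in the raw HTML, so clickable `<div>` or `<button>` elements and links injected later by JavaScript won’t show up in either list.

```python
from html.parser import HTMLParser


class LinkAudit(HTMLParser):
    """Split <a> elements into crawlable links and ones search engines can't follow."""

    def __init__(self) -> None:
        super().__init__()
        self.crawlable: list[str] = []
        self.uncrawlable: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href and not href.strip().lower().startswith(("javascript:", "#")):
            self.crawlable.append(href)
        else:
            # e.g. <a onclick="go('/pricing')"> or href="javascript:void(0)"
            self.uncrawlable.append(href or "(no href)")


def audit_links(html: str) -> tuple[list[str], list[str]]:
    parser = LinkAudit()
    parser.feed(html)
    parser.close()
    return parser.crawlable, parser.uncrawlable


nav = '<nav><a href="/pricing">Pricing</a><a onclick="go(\'/docs\')">Docs</a></nav>'
print(audit_links(nav))
```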

Accidentally noindexing important pages blocks them from the index. Check carefully that staging-site directives (noindex meta tags, X-Robots-Tag headers, restrictive robots.txt rules) don’t carry over to production.
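A pre-deploy check can catch leftover staging directives by looking for `noindex` (or `none`, which means noindex plus nofollow) in both the robots meta tag and the `X-Robots-Tag` header. A minimal sketch; how pages and headers are fetched is left out, and the header parsing is simplified (it doesn’t handle user-agent-prefixed values such as `googlebot: noindex`).

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() in {"robots", "googlebot"}:
            content = attr.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",")]


def is_noindexed(html: str, x_robots_tag: str = "") -> bool:
    """True if the meta robots tag or X-Robots-Tag header contains noindex or none."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    header = [d.strip().lower() for d in x_robots_tag.split(",")]
    return bool({"noindex", "none"} & set(finder.directives + header))


print(is_noindexed('<head><meta name="robots" content="noindex, nofollow"></head>'))
```

Failing the production deploy whenever an important URL returns `True` turns this into a guardrail rather than an after-the-fact audit.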

Missing structured data misses opportunities for rich results that improve click-through rates and visibility.

Ignoring international implementation for multi-language or multi-country sites creates duplicate content issues and poor targeting.

Not testing implementations leads to SEO-breaking mistakes going unnoticed until rankings suffer. Test thoroughly in staging before deploying.

Technical SEO Tools for Developers

Specialized tools help developers identify, diagnose, and fix technical SEO issues efficiently.

Google Search Console is essential for monitoring search performance, identifying technical issues, and testing implementations. Every website should be verified and actively monitored.

Google PageSpeed Insights measures performance and provides specific optimization recommendations including Core Web Vitals performance.

Screaming Frog SEO Spider crawls websites like search engines, identifying broken links, redirect chains, missing metadata, and numerous technical issues.

Lighthouse (built into Chrome DevTools) audits performance, accessibility, SEO, and best practices, providing scores and recommendations.

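Google’s standalone Mobile-Friendly Test, which verified mobile optimization, was retired in December 2023; Lighthouse and PageSpeed Insights are now the main tools for checking mobile rendering and performance.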

Google Rich Results Test validates structured data and previews how rich results might appear.

GTmetrix analyzes performance with detailed waterfall charts showing exactly how pages load and what slows them.

WebPageTest provides advanced performance testing with options for different locations, browsers, and connection speeds.

Regular use of these tools helps developers maintain technical SEO health and identify issues before they significantly impact search visibility.

Moving Forward with Technical SEO

Technical SEO isn’t optional or secondary: it’s the foundation that enables content to reach users through search engines. Brilliant content on technically flawed websites struggles to rank, while mediocre content on technically excellent websites often outperforms superior content with technical problems.

Developers who understand and implement proper technical SEO give their websites and clients significant competitive advantages. In markets where content quality is similar across competitors, technical implementation differences often determine who ranks and who doesn’t.

This checklist covers essential technical SEO knowledge developers need. Not every item applies to every website, but understanding these concepts helps you make informed technical decisions that support search visibility rather than inadvertently blocking it.

Technical SEO is ongoing practice rather than one-time implementation. Search engines evolve, best practices change, and new technologies emerge. Staying current with technical SEO requirements maintains search visibility as the web evolves.

Whether you’re building websites professionally or working with SEO services to optimize existing sites, understanding technical SEO helps bridge development and marketing, creating websites that look great, work well, and actually reach users through search engines.

Need help with technical SEO implementation or audit? Our team combines development expertise with SEO knowledge to build and optimize technically sound websites that rank well. Contact us to discuss your technical SEO needs.